
Computational Creativity (part 2)

How an AI muse helps me write

For the uninitiated, robots can now write books. Not very good books. Actually, they can barely write coherent essays. If I were grading them in high school English, they’d all get D’s at best.

Typically, these high school dropout robots are built on transformer neural network models. Still, the technology has now nearly surpassed the abilities of infinite monkeys on typewriters.

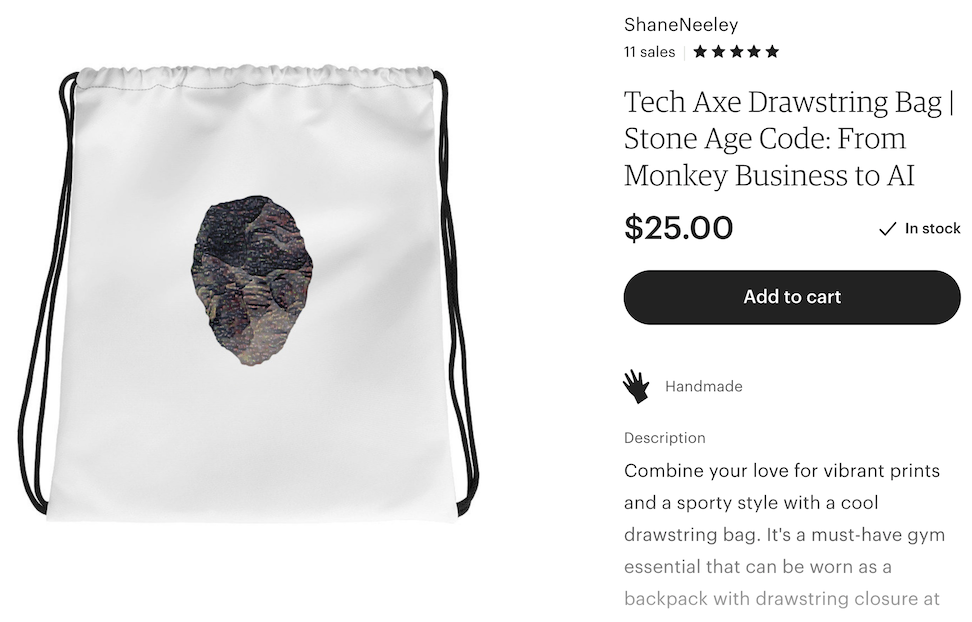

In writing Stone Age Code, I made heavy use of AI. It got a little expensive, and I plan on asking AWS for some free computing credits. If they hook me up, I’ll show interested readers of my book how to rent GPUs and do other things on AWS.


I used transformer models (mainly GPT-2 Large, some XLNet) to:

- Write “Robo-Excerpts”: funny passages and asides in the book.

- Write Acclaim for my Kindle store book description page.

- Write my Author Bio.

- Write Tweets to gain a Twitter following (didn’t work, I still have just 30 followers).

- Inspire the actual writing in the book.

- Write Mailing List emails (just kidding y’all, those are really me)

- Entertain me during the grueling process of publishing.

There’s been a lot of talk about OpenAI’s GPT-3 lately. Well, I’m not that cool; I don’t have access to it. Actually, I can use GPT-3 through other people’s products, but I can’t train it myself. Call me egotistical, but I want my robots to be like me, and so I have to train them to be.

With the lovely Python package simpletransformers, PyTorch, and AWS EC2, I can train a GPT-2 model however I like. Thank you to the creator and supporters of that tool.

I gained a lot of experience with simpletransformers and PyTorch through projects at work, where we used BERT to do multi-label classification on medical texts. Of the handful of packages I tried, including Hugging Face’s own library, I found this wrapper the easiest to get running.
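For readers who want to try this, here is a minimal sketch of what fine-tuning GPT-2 with simpletransformers can look like. It is not the exact setup used for the book: the helper names `build_train_args` and `fine_tune`, the file names, the epoch count, and the batch size are all placeholder assumptions, and a model as big as GPT-2 Large realistically needs a rented GPU.

```python
def build_train_args(epochs=3, output_dir="outputs/"):
    """Return a settings dict for fine-tuning GPT-2 (all values are illustrative)."""
    return {
        "num_train_epochs": epochs,    # more epochs: closer mimicry, more risk of copying
        "mlm": False,                  # GPT-2 is a causal language model, not a masked one
        "train_batch_size": 1,         # assumption: keep small so a large model fits in memory
        "output_dir": output_dir,      # where checkpoints are written
        "overwrite_output_dir": True,
        "reprocess_input_data": True,
    }


def fine_tune(train_file, eval_file=None, model_name="gpt2-large", use_cuda=True):
    """Fine-tune a pretrained GPT-2 checkpoint on a plain-text file."""
    # Imported lazily so the settings helper can be inspected without the GPU stack installed.
    from simpletransformers.language_modeling import LanguageModelingModel

    model = LanguageModelingModel(
        "gpt2", model_name, args=build_train_args(), use_cuda=use_cuda
    )
    model.train_model(train_file, eval_file=eval_file)
    return model
```

Calling `fine_tune("train.txt")` would then write a fine-tuned model to `outputs/`, which can be loaded later for text generation.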

To train these robots, I slogged through the generation of unique datasets consisting of:

- My own writing over the years; notes and stand-up jokes.

- My book itself.

- My Kindle Highlights (years of favorited passages by other authors)

- Bio sections from popular authors.

- Acclaim from popular books.
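Most of the slog is getting scattered text into one file the trainer can read. The sketch below shows one way to do it, assuming each source is a plain-text file with samples separated by blank lines. The function name `build_corpus`, the file layout, and the 10% evaluation split are assumptions for illustration, not a description of the actual pipeline.

```python
import random
import re
from pathlib import Path


def build_corpus(source_files, train_path, eval_path, eval_fraction=0.1, seed=0):
    """Merge text files into train/eval files with one sample per line."""
    samples = []
    for path in source_files:
        text = Path(path).read_text(encoding="utf-8")
        # Treat blank-line-separated chunks as samples and flatten each onto one line.
        for chunk in re.split(r"\n\s*\n", text):
            line = " ".join(chunk.split())
            if line:
                samples.append(line)

    samples = list(dict.fromkeys(samples))  # drop exact duplicates, keep first-seen order
    random.Random(seed).shuffle(samples)    # fixed seed so the split is repeatable

    n_eval = int(len(samples) * eval_fraction)
    Path(eval_path).write_text("\n".join(samples[:n_eval]) + "\n", encoding="utf-8")
    Path(train_path).write_text("\n".join(samples[n_eval:]) + "\n", encoding="utf-8")
    return len(samples) - n_eval, n_eval
```

Keeping a separate file list per dataset (jokes, highlights, bios, acclaim) makes it easy to train one robot per job rather than one robot on everything.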

Training the robot like this made it extremely unique. It sounded just like me, my own little buddy clone. It sounded like my favorite authors (I had to be careful not to plagiarize anything, because if you train for enough epochs, the model starts copying its training text word for word). It sounded like real acclaim.
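One simple way to catch that kind of copying is to look for long runs of words that a generated passage shares with the training text. This is a rough sketch rather than a real plagiarism detector: the function names are made up for this example, the 8-word window is an arbitrary assumption, and punctuation stays attached to words, so near-copies can slip through.

```python
def ngrams(text, n):
    """Return the set of n-word sequences in `text`, lowercased."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def copied_spans(generated, corpus, n=8):
    """Return the n-word runs of `generated` that also appear verbatim in `corpus`."""
    shared = ngrams(generated, n) & ngrams(corpus, n)
    return sorted(" ".join(gram) for gram in shared)
```

Any passage where `copied_spans` returns something goes in the reject pile, or at least gets a careful read before it goes anywhere near the book.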

What this did for me was create an inspiring muse. I still had to cherry-pick heavily (see the ode to cherry-picking in Computational Creativity part 1), but it often provided the nudges that I needed as an author. I can’t say it made writing easier, because the coding and sorting through junk took a lot of work. Though it certainly made my book unique. Thanks robot. I’m excited to see how AI improves again in 2021, 2022, and beyond, so I can keep a good muse in my pocket.

In the final section of the book, I have random Robo-Excerpts: passages AI generated that really got my goat. Here is one, with my prompt in bold:

**A sapien, a denisovan, an erectus and a habilis walk into a bar.** One says “hiya, what are u doing?” The other says “hiya, where are u from?” The drunkard says “From Canada!” and the host responds, “Oh, you’re from Saskatchewan.” The two sit back down and drink and smoke until dawn. After a while, the host wonders why they’re still talking about Saskatchewan, and they may start talking about how their home state is the best in the world.

This post was sponsored by 365 Data Science. This program is the best learning solution for beginner data scientists.

